InfiniBand NDR OSFP Solutions

NVIDIA Quantum-2 InfiniBand for large-scale AI and cloud fabrics

Platform Overview

NVIDIA Quantum™-2 InfiniBand is the backbone for next-generation AI clusters and hyperscale data centers, pairing 400 Gb/s NDR connectivity with advanced telemetry and automation.

The second-generation fabric doubles port speed, triples switch port density, delivers 5× switch system capacity, and unlocks 32× collective acceleration versus the previous generation. With Dragonfly+ topology, Quantum-2 networks scale to over a million 400 Gb/s nodes in just three hops, enabling global AI workloads with deterministic latency.

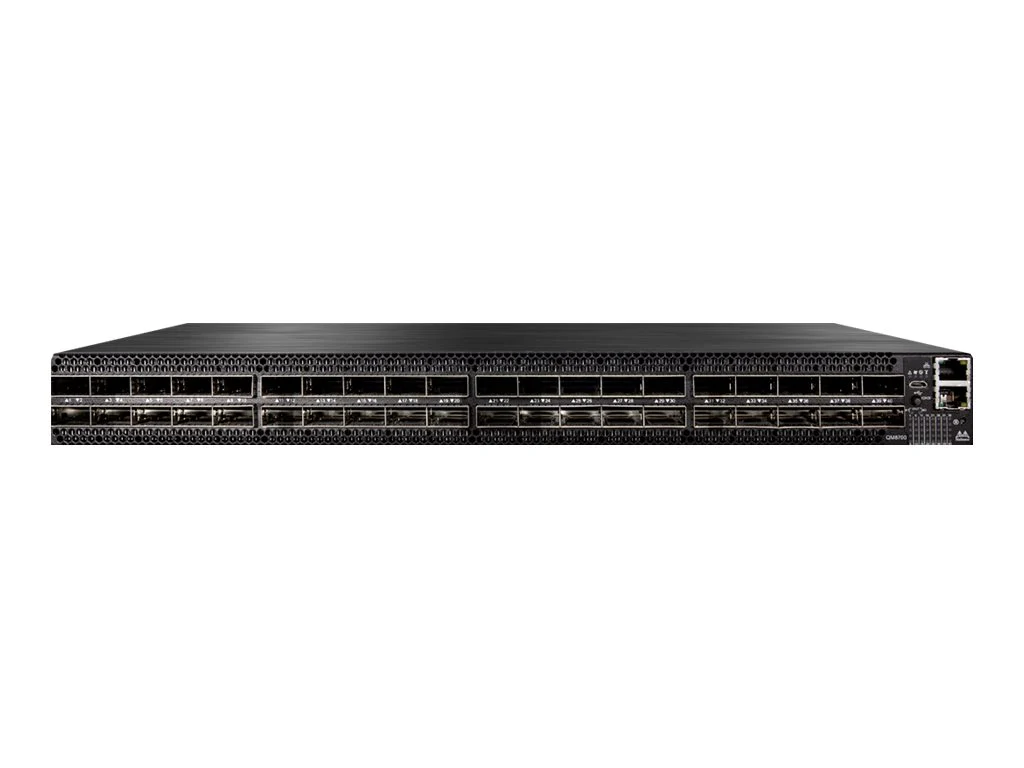

NVIDIA Quantum-2 InfiniBand Switch

Highlighted Models

Two principal models are available: MQM9790-NS2F (externally managed) and MQM9700-NS2F (integrated management). Both share identical port density and speed.

Key Specifications

- 64 × 400 Gb/s or 128 × 200 Gb/s InfiniBand ports

- 1U design with 32 OSFP cages on a single panel

- Each OSFP cage carries two independent 400 Gb/s ports

- Flexible port-group configurations for leaf, spine, or Dragonfly+
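The figures in this list follow from simple arithmetic, which the sketch below works through. The cage count, ports per cage, and port speed come from the specifications above. The leaf-spine sizing function is an illustrative assumption (a textbook non-blocking two-tier layout), not a published FS or NVIDIA sizing rule.

```python
# Figures taken from the specification list above.
OSFP_CAGES = 32
PORTS_PER_CAGE = 2        # two 400 Gb/s ports per OSFP cage
PORT_SPEED_GBPS = 400


def switch_capacity(cages=OSFP_CAGES, ports_per_cage=PORTS_PER_CAGE,
                    speed_gbps=PORT_SPEED_GBPS):
    """Return (port_count, one_way_tbps, bidirectional_tbps) for one switch."""
    ports = cages * ports_per_cage
    one_way_tbps = ports * speed_gbps / 1000
    return ports, one_way_tbps, 2 * one_way_tbps


def two_tier_nonblocking_hosts(radix):
    """Hosts in a non-blocking leaf-spine fabric (illustrative assumption).

    Each leaf uses half its ports for hosts and half for uplinks, and a
    spine layer built from the same switch can attach up to `radix` leaves.
    """
    return radix * (radix // 2)


ports, one_way, both_ways = switch_capacity()
print(ports, one_way, both_ways)          # 64 ports, 25.6 and 51.2 Tb/s
print(two_tier_nonblocking_hosts(ports))  # 2048 hosts at 400 Gb/s
```

Larger fabrics add a third tier or use Dragonfly+; those layouts are not modeled here.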

NVIDIA SHARP™ Gen3

Built-in SHARP collective acceleration multiplies network compute capacity by 32×, enabling concurrent tenants and large-scale AI training with consistent performance.

Switch Highlights

- MPI_Alltoall and collective offload

- MPI tag matching hardware engines

- Dynamic adaptive routing

- Advanced congestion control

- Self-healing fabric telemetry

- Purpose-built for AI and HPC clusters

NVIDIA ConnectX-7 InfiniBand Adapter

ConnectX-7 adapters deliver 400 Gb/s throughput via a single OSFP cage, supporting PCIe Gen5 x16 (or Gen4 x16) host connectivity with hardware-accelerated networking.

Feature Highlights

- Bandwidth: 400 Gb/s per port with 100 Gb/s SerDes

- Form Factor: Single-port OSFP modules for adapters and DPUs

- Acceleration: Inline encryption, MPI offload, RoCE, GPUDirect®

- Compatibility: Works with Quantum-2 switches and BlueField DPUs
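When choosing a host slot, it helps to compare raw PCIe bandwidth with the 400 Gb/s port rate. The sketch below does that using the per-lane rates from the PCIe specifications (16 GT/s for Gen4, 32 GT/s for Gen5) and 128b/130b encoding. It ignores packet and protocol overhead, so usable throughput will be somewhat lower than these figures.

```python
# Per-lane signalling rates from the PCIe specifications, in GT/s.
PCIE_GT_PER_LANE = {4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line coding (Gen3 and later)


def pcie_raw_gbps(gen, lanes):
    """Raw one-direction link bandwidth in Gb/s, before protocol overhead."""
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def can_sustain(gen, lanes, port_gbps=400):
    """True if the raw PCIe bandwidth is at least the network port rate."""
    return pcie_raw_gbps(gen, lanes) >= port_gbps


for gen in (5, 4):
    print(f"Gen{gen} x16: {pcie_raw_gbps(gen, 16):.1f} Gb/s, "
          f"sustains 400G: {can_sustain(gen, 16)}")
```

By this estimate a Gen5 x16 slot has headroom for a 400 Gb/s port, while a Gen4 x16 slot tops out near 252 Gb/s, so the adapter works in it but cannot reach line rate.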

OSFP Thermal Considerations

Switch-side OSFP optics include integrated heat fins for airflow optimization, while adapters typically use flat-top OSFP modules. Match cooling profiles to chassis airflow.

NDR Optical Connectivity

NDR switch ports rely on OSFP modules with eight 100 Gb/s PAM4 lanes. This architecture supports multiple deployment patterns:

800G ↔ 800G

Direct Quantum-2 switch interconnect

800G ↔ 2 × 400G

Split to adapters or DPUs

800G ↔ 4 × 200G

Backwards compatibility to HDR systems
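The three patterns above are different ways of dividing the same eight 100 Gb/s lanes. As a minimal sketch, the helper below (`breakout` is a hypothetical name used only for illustration) computes the lanes and speed available at each far-end port.

```python
LANES_PER_OSFP = 8    # eight PAM4 lanes per switch-side OSFP module
LANE_GBPS = 100       # 100 Gb/s per lane


def breakout(ends):
    """Split the eight lanes evenly across `ends` far-end ports."""
    if ends <= 0 or LANES_PER_OSFP % ends != 0:
        raise ValueError(f"cannot split {LANES_PER_OSFP} lanes across {ends} ends")
    lanes = LANES_PER_OSFP // ends
    return {"ends": ends, "lanes_per_end": lanes, "gbps_per_end": lanes * LANE_GBPS}


for ends in (1, 2, 4):
    print(breakout(ends))
# 1 end  -> 8 lanes, 800 Gb/s
# 2 ends -> 4 lanes, 400 Gb/s each
# 4 ends -> 2 lanes, 200 Gb/s each
```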

FS 800G/400G cables and transceivers cover distances up to 2 km, streamlining high-density deployments for training clusters and data centers.

800G NDR Connection Options

FS OSFP-SR8-800G

Dual-port 2 × 400 Gb/s multimode module based on 100G-PAM4 lanes. It connects over two MPO-12/APC trunks and reaches up to 50 m for ToR/leaf connections.

Reference Topologies

1. Switch-to-Switch 800G

Deploy two OSFP-SR8-800G optics, one per switch, and two MPO-12/APC OM4 trunk cables to link Quantum-2 switches at full 800 Gb/s.

2. Switch-to-Adapter 800G

Use OSFP-SR8-800G modules with straight MPO cabling to feed up to two ConnectX-7 adapters or BlueField DPUs concurrently.

Passive Breakout Cables

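For cost-optimized short-reach deployments, OSFP-to-4×QSFP112 passive DACs and active copper cables split one Quantum-2 switch cage into four 200 Gb/s links of two 100 Gb/s PAM4 lanes each. These cables support lengths up to 3 meters. For mixed-generation fabrics, note that ConnectX-6 HDR adapters use QSFP56 cages, so confirm the adapter-side connector and lane rate against the cable datasheet before ordering.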

MTP Fiber Assemblies

MTP-12 APC to MTP-12 APC OM4 trunk cables with 8 active fibers (4 transmit, 4 receive) are recommended for OSFP SR8 transceivers, which take two trunks each. APC polishing minimizes back reflection and preserves signal integrity for PAM4 modulation.
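For cable planning, the fiber counts can be tallied as in the sketch below. It assumes each 800G switch-to-switch link uses one SR8 transceiver per end and two MTP-12 trunks, each with 8 of its 12 fibers active, as described in this document. The function name and the returned fields are illustrative.

```python
TRUNKS_PER_LINK = 2            # one trunk per 400 Gb/s half of the SR8 module
FIBERS_PER_MTP12 = 12
ACTIVE_FIBERS_PER_TRUNK = 8    # 4 transmit + 4 receive


def fiber_plan(links_800g):
    """Tally optics and fibers for a number of 800G switch-to-switch links."""
    trunks = TRUNKS_PER_LINK * links_800g
    return {
        "transceivers": 2 * links_800g,   # one SR8 module at each end
        "trunks": trunks,
        "active_fibers": trunks * ACTIVE_FIBERS_PER_TRUNK,
        "unused_fibers": trunks * (FIBERS_PER_MTP12 - ACTIVE_FIBERS_PER_TRUNK),
    }


print(fiber_plan(16))
# {'transceivers': 32, 'trunks': 32, 'active_fibers': 256, 'unused_fibers': 128}
```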

Qualification & Testing

FS performs extensive validation on NDR InfiniBand optics and cables to guarantee reliability.

Optical Spectrum

Verifies center wavelength, SMSR, and spectral width for optimal transmission.

Eye Diagram

Analyzes eye height and width of PAM4 signals to ensure clean signaling.

BER Testing

Confirms that bit error rates stay low enough for mission-critical workloads.
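To give a sense of what a BER test involves, the sketch below estimates how long an error-free run must last to support a given BER claim. It uses the standard zero-error confidence bound, N = -ln(1 - CL) / BER. The target BER of 1e-12 and the 95% confidence level are illustrative assumptions, not FS test parameters.

```python
import math


def bits_for_confidence(target_ber, confidence=0.95):
    """Bits that must pass error-free to claim BER <= target_ber."""
    return -math.log(1.0 - confidence) / target_ber


def seconds_to_verify(target_ber, line_rate_gbps, confidence=0.95):
    """Error-free run time needed at the given line rate."""
    return bits_for_confidence(target_ber, confidence) / (line_rate_gbps * 1e9)


# Assumed example: 1e-12 target on a 400 Gb/s port at 95% confidence.
print(f"{seconds_to_verify(1e-12, 400):.2f} s")   # about 7.49 s
```

Stricter targets scale linearly: each factor of ten in BER multiplies the required run time by ten.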

Thermal Cycling

Validates stability under extreme temperature swings for long-term durability.

Summary

With deep expertise in InfiniBand networking, FS delivers end-to-end solutions covering switches, adapters, optics, copper assemblies, and validation services. Our portfolios empower data centers, HPC clusters, edge computing, and AI platforms with balanced performance and cost efficiency.

Contact our team to design a future-proof InfiniBand NDR architecture that accelerates your business outcomes.