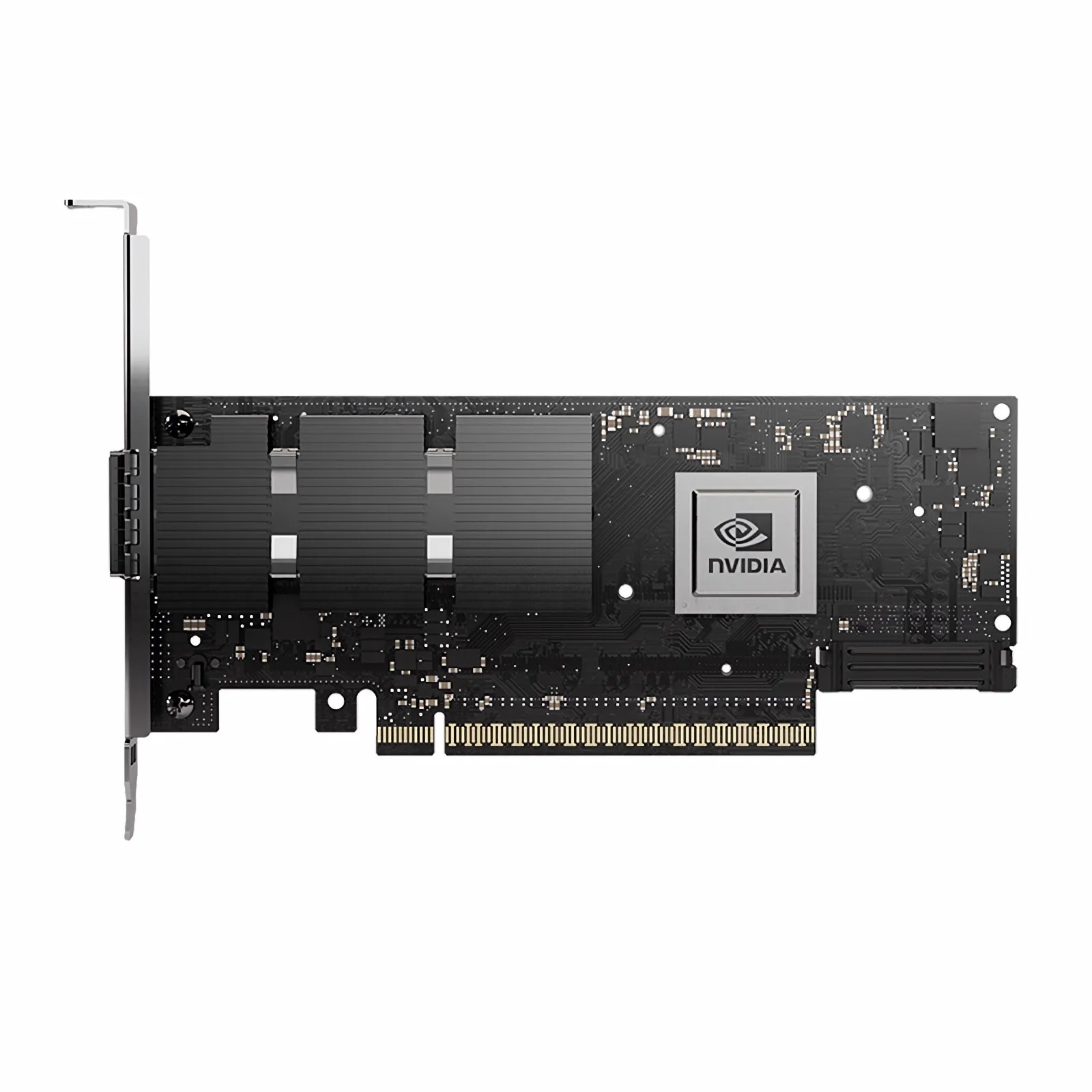

NVIDIA CONNECTX-7

InfiniBand Network Computing Adapter

Harness Network Computing for Data-Driven Science

As a cornerstone of the NVIDIA® Quantum-2 InfiniBand platform, the NVIDIA ConnectX®-7 network computing adapter, an InfiniBand host channel adapter (HCA), delivers the performance needed for the world’s most demanding workloads. With ultra-low latency, up to 400 Gb/s throughput, and next-generation NVIDIA in-network acceleration engines, ConnectX-7 gives AI training clusters, high-performance computing (HPC) installations, and hyperscale cloud data centers the scalability and intelligence they require.

ConnectX-7 extends the AI revolution and the era of software-defined science by pairing industry-leading bandwidth with network computing offloads. Hardware acceleration for collectives, MPI tag matching, MPI_Alltoall, and rendezvous protocols delivers high performance and scalability for compute- and data-intensive applications, while backward compatibility protects existing investments.
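MPI tag matching is the step where each incoming message is paired with a posted receive by source rank and tag, with wildcards allowed. The sketch below is a minimal software model of that matching rule, assuming two queues (posted receives and unexpected messages) searched in order. All names (`TagMatcher`, `ANY_SOURCE`, `ANY_TAG`, and so on) are hypothetical; this is not the ConnectX-7 offload interface or any MPI library's internals.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

# Hypothetical stand-ins for MPI_ANY_SOURCE / MPI_ANY_TAG.
ANY_SOURCE = -1
ANY_TAG = -1


@dataclass
class Message:
    source: int
    tag: int
    payload: bytes


@dataclass
class PostedRecv:
    source: int
    tag: int
    result: Optional[Message] = None


def matches(recv: PostedRecv, msg: Message) -> bool:
    """Source and tag must agree unless the receive uses a wildcard."""
    return recv.source in (ANY_SOURCE, msg.source) and recv.tag in (ANY_TAG, msg.tag)


class TagMatcher:
    """Software model of the two queues searched on every send and receive."""

    def __init__(self) -> None:
        self.posted = deque()      # receives posted before their message arrived
        self.unexpected = deque()  # messages that arrived before a matching receive

    def post_recv(self, recv: PostedRecv) -> PostedRecv:
        # Scan unexpected messages oldest-first so earlier messages are not overtaken.
        for i, msg in enumerate(self.unexpected):
            if matches(recv, msg):
                del self.unexpected[i]
                recv.result = msg
                return recv
        self.posted.append(recv)
        return recv

    def arrive(self, msg: Message) -> None:
        # Scan posted receives in the order they were posted.
        for i, recv in enumerate(self.posted):
            if matches(recv, msg):
                del self.posted[i]
                recv.result = msg
                return
        self.unexpected.append(msg)
```

In this model every search is a linear scan performed by the host CPU; MPI tag matching offload performs this matching on the adapter instead.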

Product Portfolio

- Single-port or dual-port adapters delivering 400 Gb/s or 200 Gb/s per port with OSFP connectors.

- Dual-port 200 Gb/s configurations with QSFP connectors for broad ecosystem compatibility.

- PCIe HHHL cards with NVIDIA Socket Direct™ options for maximum host bandwidth.

- OCP 3.0 TSFF and SFF cards for modern modular servers.

- Standalone ConnectX-7 ASIC supporting PCIe switch capabilities for custom designs.

Key Features

InfiniBand Capabilities

- Compliant with InfiniBand Trade Association (IBTA) specification 1.5.

- Up to four InfiniBand ports with hardware congestion control.

- Remote Direct Memory Access (RDMA) send/receive semantics.

- Atomic operations and 16 million I/O channels.

- MTU support from 256 bytes to 4 KB with message sizes up to 2 GB.

- Eight virtual lanes (VL0–VL7) plus VL15 for management.
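The MTU and message-size limits above determine how many packets one RDMA message becomes. The sketch below assumes the IBTA MTU values (256, 512, 1024, 2048, and 4096 bytes), takes 2 GB as 2^31 bytes, and ignores packet headers; `packets_for_message` is a hypothetical helper for illustration only.

```python
# InfiniBand path MTUs defined by the IBTA: 256 bytes to 4 KB in powers of two.
IB_MTUS = (256, 512, 1024, 2048, 4096)
MAX_MESSAGE = 2**31  # 2 GB maximum message size


def packets_for_message(message_bytes: int, mtu: int) -> int:
    """Number of MTU-sized packets needed to carry one message's payload."""
    if mtu not in IB_MTUS:
        raise ValueError(f"unsupported InfiniBand MTU: {mtu}")
    if not 0 <= message_bytes <= MAX_MESSAGE:
        raise ValueError("message size out of range")
    # Integer ceiling division; a zero-length message still needs one packet.
    return max(1, (message_bytes + mtu - 1) // mtu)
```

For example, a maximum-size 2 GB message split at a 4 KB MTU becomes 524,288 packets, while the same message at a 256-byte MTU needs 8,388,608.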

Enhanced Networking

- Hardware-based reliable transport with lossless delivery.

- Extended Reliable Connected (XRC) transport support.

- Dynamic Connected Transport (DCT) for scalable fabrics.

- NVIDIA GPUDirect® RDMA and GPUDirect Storage enablement.

- Out-of-order RDMA supporting adaptive routing schemes.

Network Computing Engines

- Collective acceleration offload for large-scale reductions.

- Vector collective operations to optimize AI workloads.

- MPI Tag Matching and MPI_Alltoall offload.

- Rendezvous protocol acceleration for sizable messages.

- In-network memory services to minimize data movement.
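To clarify what the collective engines compute, the sketch below models the result of two collectives with plain lists, one list per MPI rank: a sum reduction delivered to every rank (allreduce) and the block exchange performed by MPI_Alltoall. It shows reference semantics only, not how the adapter or the fabric executes them, and the function names are hypothetical.

```python
from typing import List


def allreduce_sum(per_rank: List[List[float]]) -> List[List[float]]:
    """Every rank ends up with the element-wise sum of all ranks' vectors."""
    total = [sum(values) for values in zip(*per_rank)]
    return [list(total) for _ in per_rank]


def alltoall(send: List[List[object]]) -> List[List[object]]:
    """Block j from rank i lands in slot i of rank j's receive buffer."""
    n = len(send)
    if any(len(row) != n for row in send):
        raise ValueError("each rank must send exactly one block to every rank")
    # The exchange is a transpose of the send matrix.
    return [[send[i][j] for i in range(n)] for j in range(n)]
```

Because every rank sends a distinct block to every other rank, MPI_Alltoall traffic grows with the square of the rank count, which is why it is a natural offload target.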

Compatibility

- PCIe 5.0 compliant with up to 32 lanes.

- Supports PCIe x1, x2, x4, x8, and x16 configurations.

- NVIDIA Multi-Host™ connectivity for up to eight hosts.

- PCIe Atomic operations, TLP Processing Hints (TPH), and Downstream Port Containment (DPC).

- SR-IOV virtualization for multi-tenant environments.

- Operating system and software support: Linux (RHEL, Ubuntu), Windows, VMware, and Kubernetes, with the OFED and WinOF-2 driver stacks.
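On Linux, SR-IOV virtual functions are typically enabled through the generic PCI sysfs files `sriov_totalvfs` and `sriov_numvfs` under the adapter's PCI device directory. The sketch below assumes SR-IOV is already enabled in the adapter firmware and that the script runs as root; `set_num_vfs` is a hypothetical helper, and the PCI address in the usage line is a placeholder.

```python
from pathlib import Path


def set_num_vfs(pci_dev_dir: Path, num_vfs: int) -> None:
    """Enable num_vfs virtual functions via the standard Linux PCI sysfs files."""
    total = int((pci_dev_dir / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"requested {num_vfs} VFs, device supports at most {total}")
    numvfs = pci_dev_dir / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    if current == num_vfs:
        return
    # The kernel rejects changing one non-zero VF count to another; reset to 0 first.
    if current != 0 and num_vfs != 0:
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))


# Usage (placeholder PCI address): set_num_vfs(Path("/sys/bus/pci/devices/0000:3b:00.0"), 4)
```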

Key Specifications

| Specification | Value |
|---|---|
| Maximum Aggregate Bandwidth | 400 Gb/s |
| InfiniBand Compliance | IBTA 1.5 |
| Network Ports | 1 / 2 / 4 InfiniBand ports |
| Host Interface | PCIe 5.0, up to x32 lanes |
| RDMA Message Rate | 330–370 million messages per second |
| Acceleration Engines | Collectives, MPI Tag Matching, MPI_Alltoall |
| Advanced Storage | Inline encryption & checksum offload |
| Precision Timing | PTP 1588v2 with 16 ns accuracy |
| Security | On-die secure boot with hardware root of trust |
| Management | NC-SI, MCTP over SMBus & PCIe |
| Supported OS | Linux, Windows, VMware |
| Form Factors | PCIe HHHL, Socket Direct, OCP 3.0 TSFF/SFF |
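The Maximum Aggregate Bandwidth and RDMA Message Rate rows can be related with simple arithmetic: the time budget per message, and the payload size at which the message rate alone would fill a 400 Gb/s link. This is a back-of-the-envelope sketch that ignores protocol headers; the helper name is hypothetical.

```python
LINK_GBPS = 400                # maximum aggregate bandwidth from the table
MSG_RATES = (330e6, 370e6)     # RDMA message rate range, messages per second


def bytes_per_message_at_line_rate(link_gbps: float, msgs_per_sec: float) -> float:
    """Payload size at which the given message rate would fill the link."""
    return link_gbps * 1e9 / 8 / msgs_per_sec


for rate in MSG_RATES:
    ns_per_msg = 1e9 / rate
    size = bytes_per_message_at_line_rate(LINK_GBPS, rate)
    print(f"{rate / 1e6:.0f} M msg/s: {ns_per_msg:.2f} ns per message, "
          f"{size:.1f} B per message to fill {LINK_GBPS} Gb/s")
```

At these rates each message has roughly 2.7 to 3.0 ns of adapter time, and messages of about 135 to 152 bytes or larger are bandwidth-bound rather than message-rate-bound.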

PCIe Adapter Portfolio & Ordering

Select from the most common NVIDIA ConnectX-7 configurations. Contact Glocal Storage for thermal profiles, bracket options, or regional inventory forecasts.

| InfiniBand Speed | Ports & Connector | Host Interface | Form Factor | Orderable Part Number (OPN) |
|---|---|---|---|---|
| Up to 400 Gb/s | 1 × OSFP | PCIe 4.0/5.0 x16 with expansion option | HHHL | MCX75510AAS-NEAT |
| Up to 400 Gb/s | 1 × OSFP | PCIe 4.0/5.0 x16 | HHHL | MCX75310AAS-NEAT |
| Up to 400 Gb/s | 1 × OSFP | PCIe 4.0/5.0 (2×8 sharing a slot) | HHHL | MCX75210AAS-NEAT |
| Up to 200 Gb/s | 1 × OSFP | PCIe 4.0/5.0 x16 with expansion option | HHHL | MCX75510AAS-HEAT |
| Up to 200 Gb/s | 1 × OSFP | PCIe 4.0/5.0 x16 | HHHL | MCX75310AAS-HEAT |
| Up to 200 Gb/s | 1 × OSFP | PCIe 4.0/5.0 (2×8 sharing a slot) | HHHL | MCX75210AAS-HEAT |
| Up to 200 Gb/s | 1 × QSFP | PCIe 4.0/5.0 x16 with expansion option | HHHL | MCX755105AS-HEAT |
| Up to 200 Gb/s | 2 × QSFP | PCIe 4.0/5.0 x16 with expansion option | HHHL | MCX755106AS-HEAT |

Dimensions without bracket: 167.65 mm × 68.90 mm. All adapters ship with a tall bracket installed and a low-profile bracket included. Secure boot with hardware root of trust is enabled on every OPN.
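The "expansion option" and 2×8 host interfaces in the table relate to PCIe link arithmetic. PCIe 4.0 signals at 16 GT/s per lane and PCIe 5.0 at 32 GT/s, both with 128b/130b encoding. The sketch below computes per-direction upper bounds (real throughput is lower once TLP and DLLP overhead is counted); the helper names are hypothetical.

```python
# Per-lane signaling rates in GT/s; PCIe 3.0 and later use 128b/130b encoding.
GT_PER_LANE = {"4.0": 16.0, "5.0": 32.0}
ENCODING = 128 / 130


def pcie_gbps(gen: str, lanes: int) -> float:
    """Per-direction data rate after line encoding, before packet overhead."""
    return GT_PER_LANE[gen] * lanes * ENCODING


def fits(gen: str, lanes: int, port_gbps: float, ports: int = 1) -> bool:
    """True if the host link's upper bound covers the ports' combined line rate."""
    return pcie_gbps(gen, lanes) >= port_gbps * ports


for gen, lanes in (("4.0", 16), ("4.0", 32), ("5.0", 16), ("5.0", 32)):
    print(f"PCIe {gen} x{lanes}: {pcie_gbps(gen, lanes):.1f} Gb/s")
```

A PCIe 4.0 x16 link tops out near 252 Gb/s, below a 400 Gb/s port. This appears to be why 400 Gb/s operation in PCIe 4.0 hosts relies on a second x16 link through the expansion option, while a PCIe 5.0 x16 link (about 504 Gb/s) can carry one 400 Gb/s port on its own.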

Auxiliary Board Kits (Passive) for Dual PCIe 4.0 x16 Expansion

| Description | Orderable Part Number (OPN) |
|---|---|
| 15 cm harness kit | MTMK9100-T15 |
| 35 cm harness kit | MTMK9100-T35 |
| 55 cm harness kit | MTMK9100-T55 |

OCP 3.0 Small Form Factor Adapters

Default bracket types are indicated by the final character in each OPN (B/C = pull tab, 1/J = internal latch, E/F = ejector latch). Alternative bracket options are available via special order.

| InfiniBand Speed | Ports & Connector | Host Interface | Form Factor | Orderable Part Number (OPN) |
|---|---|---|---|---|
| Up to 400 Gb/s | 1 × OSFP | PCIe 4.0/5.0 x16 | TSFF | MCX75343AAS-NEAC |
| Up to 200 Gb/s | 2 × QSFP | PCIe 4.0/5.0 x16 | SFF | MCX753436AS-HEAB |

Note: Compliant with OCP 3.2 specifications. Configure P1x alignment as required.
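The bracket legend for OCP 3.0 OPNs can be applied mechanically. The sketch below decodes the bracket type from the final character of an OCP OPN using that legend, and reads a speed from the first character after the hyphen. The speed mapping (N for 400 Gb/s, H for 200 Gb/s) is only inferred from the ordering tables in this datasheet and is an assumption, not an official NVIDIA OPN scheme; `decode_opn` is hypothetical.

```python
from typing import Optional

# Bracket legend for OCP 3.0 OPNs (final character of the OPN).
OCP_BRACKETS = {
    "B": "pull tab", "C": "pull tab",
    "1": "internal latch", "J": "internal latch",
    "E": "ejector latch", "F": "ejector latch",
}

# Assumed speed codes, inferred from the ordering tables in this datasheet only.
SPEED_CODES = {"N": 400, "H": 200}


def decode_opn(opn: str, ocp: bool = False) -> dict:
    """Return the inferred maximum speed and, for OCP cards, the default bracket."""
    _, suffix = opn.split("-", 1)
    info: dict = {"max_gbps": SPEED_CODES.get(suffix[0])}
    if ocp:
        bracket: Optional[str] = OCP_BRACKETS.get(suffix[-1])
        info["bracket"] = bracket
    return info


print(decode_opn("MCX75343AAS-NEAC", ocp=True))
```

Unknown codes decode to `None` rather than raising, so the helper stays usable if new suffixes appear.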

ConnectX-7 ASIC Ordering Options

| Product Description | Orderable Part Number (OPN) |
|---|---|
| ConnectX-7 dual-port IC, 400 Gb/s, PCIe 5.0 x32, no encryption | MT29108A0-NCCF-NV |
| ConnectX-7 dual-port IC, 400 Gb/s, Multi-Host, PCIe 5.0 x32, no encryption | MT29108A0-NCCF-NVM |
| ConnectX-7 dual-port IC, 400 Gb/s, Multi-Host, PCIe 5.0 x32, with encryption | MT29108A0-CCCF-NVM |