Mellanox Quantum-2 MQM9790 64-port Non-blocking NDR 400 Gb/s InfiniBand Switch

Enterprise-grade 400 Gb/s InfiniBand switch purpose-built for AI training clusters, high-performance computing, and large data centers seeking non-blocking, low-latency fabrics.

- 64-port non-blocking architecture delivering 25.6 Tbps switching capacity.

- 32 OSFP cages, each carrying two NDR 400 Gb/s ports, with optional splitting into 128 × 200 Gb/s (NDR200) ports for north-south and east-west traffic.

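- Unmanaged (externally managed) design with no on-box management OS. Fabric configuration is handled by a subnet manager, such as NVIDIA UFM or OpenSM, running on a host in the fabric.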

- P2C airflow option engineered for dense rack integration and efficient cooling.

- Certified to CB, CE, FCC, and VCCI standards and RoHS compliant for global readiness.

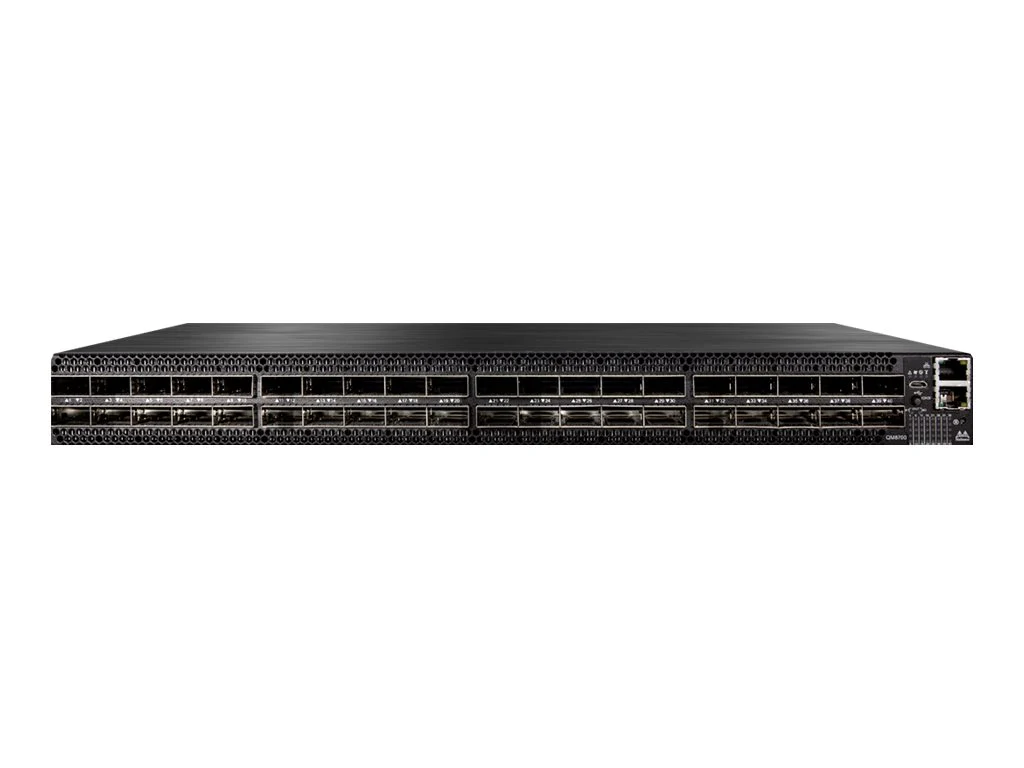

Front perspective showcasing the 32 OSFP cages (64 NDR ports) and status indicators.

Rear view highlighting redundant power supplies and cooling modules.

Side profile illustrating the compact 1U rack-mounted design.

Need additional product photography or accessory shots? Contact our sales engineers for the full media kit.

Product Overview

The Mellanox Quantum-2 MQM9790 64-port Non-blocking Unmanaged NDR 400 Gb/s InfiniBand Switch (Part ID: MQM9790-NS2F) empowers modern hyperscale data centers and AI/HPC environments with next-generation NVIDIA Mellanox Quantum™-2 silicon. It delivers deterministic, ultra-low latency fabric performance while maintaining non-blocking connectivity for the most demanding workloads.

With 32 OSFP cages supporting flexible breakout, the MQM9790 adapts to expansion needs across cloud providers, supercomputing sites, and research institutions. Optional front-to-back or back-to-front airflow, hot-swappable modules, and redundant power ensure resilient 24/7 operations in dense racks.
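The cage-to-port arithmetic behind the breakout options can be sketched in Python. This is a planning aid under stated assumptions, not a vendor tool: it assumes each OSFP cage carries two NDR 400 Gb/s ports and that each NDR port can be split into two NDR200 (200 Gb/s) ports. The function name `port_plan` is hypothetical.

```python
# Hypothetical planning helper: logical port counts for the MQM9790.
# Assumptions: 2 x NDR (400 Gb/s) ports per OSFP cage, and each NDR port
# can be split into 2 x NDR200 (200 Gb/s) ports.

OSFP_CAGES = 32
PORTS_PER_CAGE = 2
NDR_GBPS = 400


def port_plan(split_ports: int) -> dict:
    """Port counts when `split_ports` NDR ports are each split into 2 x NDR200."""
    total_ndr = OSFP_CAGES * PORTS_PER_CAGE  # 64 NDR ports
    if not 0 <= split_ports <= total_ndr:
        raise ValueError(f"split_ports must be between 0 and {total_ndr}")
    ndr400 = total_ndr - split_ports
    ndr200 = 2 * split_ports
    # Splitting changes the port count, not the aggregate bandwidth.
    bandwidth_gbps = ndr400 * NDR_GBPS + ndr200 * (NDR_GBPS // 2)
    return {"ndr400": ndr400, "ndr200": ndr200, "bandwidth_gbps": bandwidth_gbps}


if __name__ == "__main__":
    for split in (0, 32, 64):
        print(split, port_plan(split))
```

Splitting trades port speed for port count; the aggregate stays at 25,600 Gb/s in every mode.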

Comprehensive global certifications, including CB, cTUVus, CE, FCC, VCCI, and RoHS, ensure compliance across regions, while an operating altitude of up to 3,050 meters supports high-altitude deployments. Its unmanaged architecture keeps the switch itself simple, leaving fabric management to a central subnet manager and providing predictable performance for large-scale fabrics.

Switching Capacity

25.6 Tbps

Non-blocking switching fabric designed for horizontal and vertical scaling.
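The headline capacity follows directly from the port count and per-port rate. Figures of 51.2 Tb/s quoted elsewhere for Quantum-2 switches typically count traffic in both directions; the derivation below shows how the two numbers relate.

```latex
\begin{aligned}
C_{\text{one-way}} &= N_{\text{ports}} \times R_{\text{port}}
  = 64 \times 400\ \text{Gb/s} = 25{,}600\ \text{Gb/s} = 25.6\ \text{Tb/s} \\
C_{\text{bidirectional}} &= 2\,C_{\text{one-way}} = 51.2\ \text{Tb/s}
\end{aligned}
```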

Port Density

32 OSFP cages (64 NDR ports)

High-density twin-port OSFP cages with multi-rate compatibility for future upgrades.

Per Port Speed

400 Gb/s

Compatible with 40/56/100/200/400 Gb/s links for versatile integration.

Services

Full Lifecycle

Planning, deployment, optimization, and training services from Glocal Storage.

- Enterprise Reliability: Redundant power and fans with hot-swap modules support continuous 24/7 operation.

- AI/HPC Optimized: Tuned for GPU-to-GPU traffic with ultra-low latency and congestion control.

- Global Certifications: Meets CB, CE, FCC, and RoHS compliance for worldwide deployment.

- Dense Footprint: Compact 1U chassis conserves rack space in expansion projects.

- Flexible Deployment: Built for cloud service providers, hyperscale operators, and research facilities.

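- Centralized Management: Managed externally through NVIDIA UFM or another InfiniBand subnet manager, which handles fabric configuration and monitoring from a single host; UFM also exposes RESTful APIs for automation.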

- Energy Efficient: Intelligent fan control and power management reduce operational costs.

- Multi-speed Support: Backward compatibility with HDR/EDR fabrics protects investments.

Technical Specifications

| Model | NVIDIA Mellanox MQM9790-NS2F |
|---|---|
| Brand | Mellanox |
| Port Configuration | 64 × 400 Gb/s NDR InfiniBand ports, 32 OSFP cages |
| Switching Capacity | 25.6 Tbps |
| Dimensions | 17.2" (W) × 1.7" (H) × 26" (D) / 438 mm × 43.6 mm × 660 mm |
| Mounting | Standard 19-inch rack |
| Operating Temperature | P2C airflow: 0°C to 35°C; C2P airflow: 0°C to 40°C |
| Storage Temperature | -40°C to 70°C |
| Operating Humidity | 10%–85% non-condensing |
| Storage Humidity | 10%–90% non-condensing |
| Altitude | Operational up to 3,050 meters |
| Airflow Options | P2C (front-to-back) / C2P (back-to-front) |
| Input Power | 1×/2× 200–240 VAC, 10 A, 50/60 Hz |
| Maximum Power | 1,610 W (with active cables) |
| Typical Power | 640 W (with passive cables, ATIS) |
| Safety Certifications | CB, cTUVus, CE, CU, S-Mark |
| EMC Certifications | CE, FCC, VCCI, ICES, RCM, CQC, BSMI, KCC, TEC, ANATEL |
| RoHS Compliance | RoHS compliant |
| Management Features | Externally managed through a fabric subnet manager such as NVIDIA UFM; on-box CLI, SNMP, and web management are offered on the managed QM9700 model |
| Embedded Processor | Intel® Core™ i3 Coffee Lake (QM9700 series only) |
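Before installation, a site can be checked against the environmental and power limits in the specification table. The sketch below is a hypothetical helper (`Site`, `check_site`, and `worst_case_amps` are illustrative names) that copies its limits from the table. The current estimate assumes unity power factor and a single power supply carrying the full 1,610 W maximum, which is a simplification rather than a vendor figure.

```python
from __future__ import annotations

from dataclasses import dataclass

# Limits copied from the MQM9790 specification table.
OPERATING_TEMP_C = {"P2C": (0.0, 35.0), "C2P": (0.0, 40.0)}
OPERATING_HUMIDITY_PCT = (10.0, 85.0)
MAX_ALTITUDE_M = 3050.0
INPUT_VOLTAGE_VAC = (200.0, 240.0)
MAX_POWER_W = 1610.0


@dataclass
class Site:
    """Hypothetical description of an installation site."""
    airflow: str        # "P2C" or "C2P"
    inlet_temp_c: float
    humidity_pct: float
    altitude_m: float
    supply_vac: float


def check_site(site: Site) -> list[str]:
    """Return every limit the site violates; an empty list means all checks pass."""
    if site.airflow not in OPERATING_TEMP_C:
        return [f"unknown airflow option {site.airflow!r}"]
    problems = []
    lo, hi = OPERATING_TEMP_C[site.airflow]
    if not lo <= site.inlet_temp_c <= hi:
        problems.append(f"inlet {site.inlet_temp_c} C outside {lo}-{hi} C for {site.airflow}")
    lo, hi = OPERATING_HUMIDITY_PCT
    if not lo <= site.humidity_pct <= hi:
        problems.append(f"humidity {site.humidity_pct}% outside {lo}-{hi}%")
    if site.altitude_m > MAX_ALTITUDE_M:
        problems.append(f"altitude {site.altitude_m} m above {MAX_ALTITUDE_M} m")
    lo, hi = INPUT_VOLTAGE_VAC
    if not lo <= site.supply_vac <= hi:
        problems.append(f"supply {site.supply_vac} VAC outside {lo}-{hi} VAC")
    return problems


def worst_case_amps(supply_vac: float) -> float:
    """Input current if one PSU carries the full maximum load.

    Assumes unity power factor; a power factor below 1 would raise this figure.
    """
    return MAX_POWER_W / supply_vac


if __name__ == "__main__":
    site = Site(airflow="P2C", inlet_temp_c=27.0, humidity_pct=45.0,
                altitude_m=800.0, supply_vac=230.0)
    print(check_site(site) or "all checks pass")
    print(f"worst-case input current: {worst_case_amps(site.supply_vac):.2f} A")
```

Note that the same 38°C inlet fails for P2C but passes for C2P, which is why the airflow option should be chosen together with the room's cooling design.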

Use Cases

Cloud Data Centers

Delivers high-performance, low-latency connectivity for multi-tenant and virtualized platforms.

AI/ML Training Clusters

Optimized for GPU workloads, sustaining massive bandwidth and consistent latency.

High-Performance Computing

Enables scientific research, simulations, and supercomputing projects with predictable fabric performance.
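For cluster planning, the 64-port radix bounds how large a non-blocking fabric can grow. The sketch below applies the textbook folded-Clos (fat-tree) formulas for identical radix-k switches: a two-level tree reaches k²/2 endpoints and a three-level tree k³/4. It is an idealized estimate that ignores ports reserved for storage, management, or other uplinks, and `fat_tree_capacity` is a hypothetical name, not part of any NVIDIA tool.

```python
def fat_tree_capacity(radix: int, levels: int) -> dict:
    """Idealized non-blocking fat tree built from identical radix-k switches.

    Each lower-tier switch sends half its ports down and half up,
    so no tier is oversubscribed.
    """
    if radix <= 0 or radix % 2:
        raise ValueError("radix must be a positive even number")
    k = radix
    if levels == 2:
        # k leaves with k/2 endpoints each; k/2 spines with one link per leaf.
        tiers = {"leaf": k, "spine": k // 2, "core": 0}
        endpoints = k * k // 2
    elif levels == 3:
        # k pods of k/2 leaf (edge) + k/2 spine (aggregation) switches,
        # plus (k/2)^2 core switches.
        tiers = {"leaf": k * k // 2, "spine": k * k // 2, "core": (k // 2) ** 2}
        endpoints = k ** 3 // 4
    else:
        raise ValueError("only 2- and 3-level trees are modeled here")
    return {"endpoints": endpoints, **tiers, "switches": sum(tiers.values())}


if __name__ == "__main__":
    for levels in (2, 3):
        print(levels, fat_tree_capacity(64, levels))
```

For the MQM9790 (k = 64), that gives 2,048 endpoints from 96 switches at two levels and 65,536 endpoints from 5,120 switches at three levels.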


Need more information?

Our technical experts are ready to help you evaluate configurations, deployment plans, and long-term support options.

1 Fullerton Road #02-01, One Fullerton, Singapore